Business Brief

Streamlining Health Technology Assessments by Automating Literature Reviews

A total of 102 countries and regions use a systematic, formal decision-making process to evaluate health interventions, according to a 2020-21 survey by the World Health Organization (WHO).4 Most European nations apply some aspects of an HTA approach to decisions about pharmaceuticals, and more than two-thirds use an HTA to support decisions about other health technologies (e.g., medical devices).5 Major public and individual health and economic consequences flow from HTA findings.

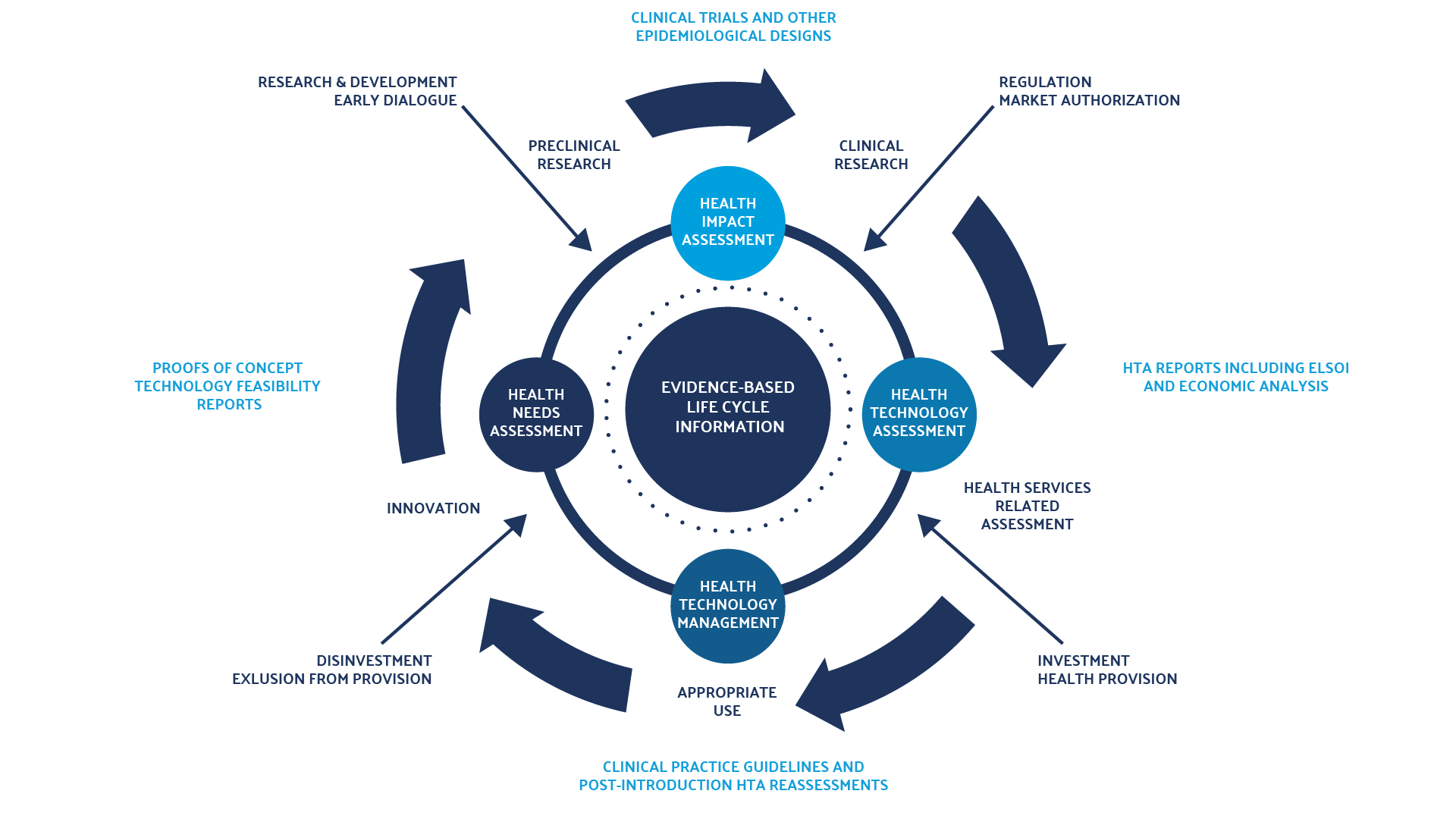

An HTA can be conducted at any point in a health intervention’s life cycle (see figure 1). It can be done before or after market introduction, or when a new indication for a marketed pharmaceutical is under consideration. As the focus on evidence-based decision-making in health care has increased, the role and scope of the HTA have evolved and demand for assessments has grown. These factors create pressure to improve the efficiency of HTA processes.

HTAs and the Systematic Literature Review

Typical Steps in Systematic Reviews for HTA6

- Topic identification

- Search design (terms, time frame, etc.)

- Literature searching, screening, and retrieval

- Data extraction

- Assessment of evidence quality and bias risk

- New evidence collection or generation, if appropriate

- Data synthesis/meta-analysis

- Formulation and dissemination of findings and recommendations

Figure 1: Health Intervention’s Life Cycle

Literature gathered for an HTA can cover a variety of topics, including the epidemiology and clinical burden of the disease or condition impacted by the health technology evaluated; the clinical efficacy, safety, and cost of available interventions and the intervention under evaluation; and organizational, social, and legal considerations.

Systematic reviews therefore may search not only peer-reviewed biomedical literature but also gray literature (e.g., non-peer-reviewed publications, unpublished material, government or association documents) and de-identified real-world evidence from government, nation-based, or payer claims databases describing the intervention’s clinical and cost impact on the target population.

Organizations conducting systematic literature reviews (SLRs) for HTAs face several acute challenges.

Challenges to Developing an HTA

- Information overload

- Time constraints

- The need for transparency and accuracy

The rising number of new pharmaceuticals, medical devices, and other health innovations, along with their novelty and increasing complexity, has led to an increased workload for HTA agencies.

Human reviewers may not apply criteria for literature assessment, retrieval, data extraction, and bias evaluation consistently to each study they screen. Systematic reviews are often conducted by teams organized by subject matter expertise. Multiple professionals may apply search and assessment criteria in slightly different ways, introducing inconsistency, error, and inadvertent bias.

Only 51% of 152 reviews evaluated in a recent study reported the use of a standardized extraction form. Only 20% reported using software for study selection, and just 12% for extraction.17 Data extraction errors are common — a recent analysis of 201 systematic reviews found an error rate of 85%.18 Spreadsheets, a common tool in developing an SLR, are prone to manual data entry errors and duplicate references that can be difficult to detect.
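To illustrate why duplicate references are hard to spot by eye in a spreadsheet, the following is a minimal sketch of rule-based de-duplication. It is not DistillerSR's method; the field names (`title`, `doi`, `year`) and the matching rules are assumptions chosen for illustration.

```python
import re
import unicodedata


def normalize_title(title: str) -> str:
    """Lowercase, strip accents and punctuation, collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def dedup_key(ref: dict) -> str:
    """Prefer the DOI as the identity of a record; fall back to title + year."""
    doi = (ref.get("doi") or "").strip().lower()
    doi = re.sub(r"^https?://(dx\.)?doi\.org/", "", doi)
    if doi:
        return "doi:" + doi
    return "title:" + normalize_title(ref.get("title", "")) + "|" + str(ref.get("year", ""))


def deduplicate(refs):
    """Return (unique_refs, duplicate_refs), keeping the first copy of each record."""
    seen = {}
    duplicates = []
    for ref in refs:
        key = dedup_key(ref)
        if key in seen:
            duplicates.append(ref)
        else:
            seen[key] = ref
    return list(seen.values()), duplicates


# Hypothetical records: the same papers exported from two sources.
refs = [
    {"title": "The PRISMA 2020 statement", "doi": "10.1136/bmj.n71", "year": 2021},
    {"title": "PRISMA 2020 statement, The", "doi": "https://doi.org/10.1136/BMJ.N71", "year": 2021},
    {"title": "Validity of Data Extraction!", "year": 2022},
    {"title": "validity of data extraction", "year": 2022},
]
unique, dups = deduplicate(refs)
print(len(unique), "unique,", len(dups), "duplicates")
```

A record that carries a DOI in one export and none in another would not be matched by this sketch, which is one reason such rules are usually combined with manual checks.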

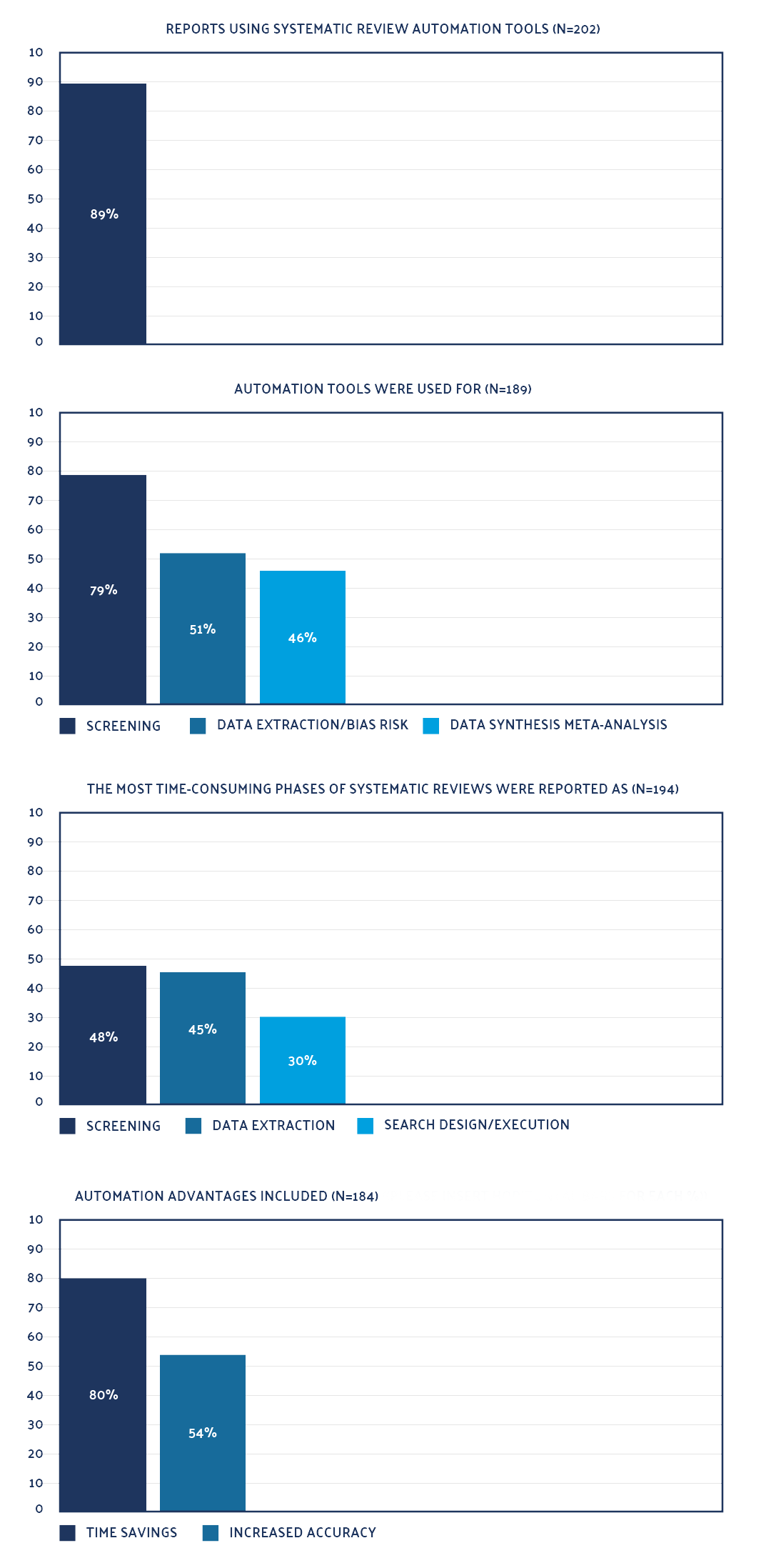

In a survey on the use of systematic review automation tools, roughly half of the respondents (51%) reported a lack of knowledge as the biggest barrier to using them. Respondents suggested developing tools for literature searching and data extraction, processes for which multiple automation tools already exist.21

DistillerSR, for example, automates the entire literature review life cycle, from search and screening to full-text retrieval, data extraction, and reporting. The software automates the management of literature collection, triage, and assessment using intelligent workflows. The workflow is configured based on the literature review methods of any study protocol, and the user can modify the workflow to suit their needs.

Specifically, DistillerSR automates the conduct of HTA by:

Literature searching and screening

- Imports references from native search platforms and from a variety of sources including gray literature

- Integrates with Embase, PubMed, Ovid, and EBSCO

- Automates literature searching, de-duping, and updates

- Applies AI to continuously reorder references based on relevance, so that team members are presented with those deemed most pertinent first

For example, one customer uses DistillerSR’s LitConnect feature to reduce the time spent updating searches by 50%, because newly published references are imported automatically. DistillerSR also applies AI to determine inclusion and exclusion patterns, reordering references based on relevance and thereby producing a more efficient overall review process and faster completion rates.
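The idea of reordering unscreened references by relevance can be sketched with a very simple word-weighting model. This is only an illustration under stated assumptions: it is not DistillerSR's algorithm, and the function names and the smoothed log-odds scoring are hypothetical choices.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Set of lowercase alphabetic words in a title or abstract."""
    return set(re.findall(r"[a-z]+", text.lower()))


def train(screened):
    """Learn word weights from already-screened records.

    screened: list of (text, included) pairs, where included is True/False.
    Returns a dict mapping each word to a smoothed log-odds weight:
    positive if the word is more common in included records.
    """
    inc, exc = Counter(), Counter()
    n_inc = n_exc = 0
    for text, included in screened:
        if included:
            inc.update(tokens(text))
            n_inc += 1
        else:
            exc.update(tokens(text))
            n_exc += 1
    vocab = set(inc) | set(exc)
    return {
        w: math.log((inc[w] + 1) / (n_inc + 2)) - math.log((exc[w] + 1) / (n_exc + 2))
        for w in vocab
    }


def score(text, weights):
    """Sum the weights of the known words in the text."""
    return sum(weights.get(w, 0.0) for w in tokens(text))


def prioritize(unscreened, weights):
    """Return unscreened texts ordered from most to least likely relevant."""
    return sorted(unscreened, key=lambda t: score(t, weights), reverse=True)


# Hypothetical screening decisions made so far by human reviewers.
screened = [
    ("randomized trial of drug cost effectiveness", True),
    ("cost effectiveness model of device", True),
    ("mouse study of protein folding", False),
    ("protein expression in mouse cells", False),
]
weights = train(screened)
queue = prioritize(
    ["protein folding in mouse liver", "cost effectiveness of a new drug"],
    weights,
)
print(queue[0])
```

In a continuous workflow, the weights would be retrained as reviewers record more decisions, so the queue keeps moving likely includes to the front.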

Full-text retrieval

- Connects directly to full-text open-access and paid subscription sources

DistillerSR allows customers to focus on key tasks, such as capturing high-quality data from the literature, while the administrative tasks, such as copyright management, are conducted by integrated software partners in the platform. DistillerSR’s integration with these partners allows access to free full-text or purchased subscriptions, further reducing time spent searching for articles while lowering overall literature costs.

Data extraction and bias assessment

- Validates data before submission to avoid errors

- Converts data with built-in calculations

- Automates compilation of tables

An example of DistillerSR’s automation of data extraction is its ability to capture and analyze complex data such as time points across multiple studies. All the collected data can be analyzed, shaped, and exported using the data reporting tool, either in real time or as a scheduled job.
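As a rough illustration of validating data before submission and converting it with built-in calculations, the sketch below checks an extracted outcome record and normalizes follow-up time points to weeks so they can be compared across studies. The record fields, the validation rules, and the approximate unit conversion are assumptions for this example, not DistillerSR's form schema.

```python
from dataclasses import dataclass
from typing import List

# Approximate conversion factors (assumes 52 weeks per year).
WEEKS_PER_UNIT = {"days": 1 / 7, "weeks": 1.0, "months": 52 / 12, "years": 52.0}


@dataclass
class OutcomeRecord:
    study_id: str
    events: int
    participants: int
    followup_value: float
    followup_unit: str

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the record is valid."""
        errors = []
        if self.participants <= 0:
            errors.append("participants must be positive")
        if self.events < 0 or self.events > self.participants:
            errors.append("events must be between 0 and participants")
        if self.followup_value <= 0:
            errors.append("follow-up must be positive")
        if self.followup_unit not in WEEKS_PER_UNIT:
            errors.append(f"unknown follow-up unit: {self.followup_unit}")
        return errors

    def followup_weeks(self) -> float:
        """Convert the reported time point to weeks."""
        return self.followup_value * WEEKS_PER_UNIT[self.followup_unit]

    def event_rate(self) -> float:
        """Proportion of participants with the event."""
        return self.events / self.participants


def submit(record: OutcomeRecord, table: list) -> None:
    """Reject invalid records; append valid ones with derived values."""
    errors = record.validate()
    if errors:
        raise ValueError(f"{record.study_id}: " + "; ".join(errors))
    table.append({
        "study_id": record.study_id,
        "followup_weeks": record.followup_weeks(),
        "event_rate": record.event_rate(),
    })


table = []
submit(OutcomeRecord("S1", 12, 100, 6, "months"), table)
print(table)
```

Rejecting a record at entry time, rather than discovering the problem during analysis, is the design choice this sketch is meant to show.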

Advantages of Automation for Systematic Reviews in HTAs

- Speed

- DistillerSR reduced the title/abstract screening burden by a median of 40.6% and saved a median of 29.8 hours, in one study.22

- Accuracy

- A study examined DistillerSR for accuracy in auditing excluded references, previewing the predictions of unscreened references, and screening references based on the predictions. Software choices were compared with those made by two independent human reviewers. Most (92% to 99%) AI decisions were correct. Variation in cost-effectiveness models was 1% to 3%; in randomized controlled trials, variation was between 1% and 5%. These ranges are similar to human reviewers’ margin of error,23 while the software was on average 50-60% faster. Automation also avoids manual entry errors.

- Transparency

- By recording inclusion/exclusion decisions, DistillerSR enables the provision of data for audits, transparency, and reproducibility.

- Results from each stage of the review process are displayed in a PRISMA 2020 flow chart that reports sources searched, references screened, and inclusion/exclusion decisions.

- By tracking all review activity, DistillerSR makes it easy to view the provenance of each data cell.
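The link between a recorded decision log and the counts reported in a PRISMA 2020 flow chart can be sketched as follows. The log format (one final decision per reference per stage, with the field names `ref_id`, `stage`, `decision`, and `reason`) is a hypothetical assumption for illustration and does not describe DistillerSR's internal data model.

```python
from collections import Counter


def prisma_counts(log):
    """Tally screening decisions into PRISMA 2020 style counts.

    log: list of dicts with keys 'ref_id', 'stage' ('title_abstract' or
    'full_text'), 'decision' ('include' or 'exclude'), and optionally 'reason'.
    Assumes one final decision per reference per stage.
    """
    screened = [e for e in log if e["stage"] == "title_abstract"]
    full_text = [e for e in log if e["stage"] == "full_text"]
    reasons = Counter(
        e.get("reason", "unspecified")
        for e in full_text
        if e["decision"] == "exclude"
    )
    return {
        "records_screened": len(screened),
        "records_excluded": sum(e["decision"] == "exclude" for e in screened),
        "reports_assessed_for_eligibility": len(full_text),
        "reports_excluded_by_reason": dict(reasons),
        "studies_included": sum(e["decision"] == "include" for e in full_text),
    }


# Hypothetical audit log for four references.
log = [
    {"ref_id": 1, "stage": "title_abstract", "decision": "include"},
    {"ref_id": 2, "stage": "title_abstract", "decision": "exclude"},
    {"ref_id": 3, "stage": "title_abstract", "decision": "include"},
    {"ref_id": 4, "stage": "title_abstract", "decision": "include"},
    {"ref_id": 1, "stage": "full_text", "decision": "include"},
    {"ref_id": 3, "stage": "full_text", "decision": "exclude", "reason": "wrong population"},
    {"ref_id": 4, "stage": "full_text", "decision": "exclude", "reason": "wrong outcome"},
]
for label, value in prisma_counts(log).items():
    print(label, value)
```

Because every count is derived from logged decisions, any figure in the flow chart can be traced back to the individual references behind it.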

Multiple teams within HTA agencies work together to develop assessments, and many HTA agencies outsource to external groups.24 As a web-based platform, DistillerSR acts as the nerve center for systematic review for HTA projects, making data accessible to all team members across different, often global, locations for analysis. It tracks which references have been screened and what data has been extracted to avoid duplication of effort.

It can classify references and assign them to the relevant subject matter expert for screening via a standard workflow configured by the project’s administrator. It also notifies relevant team members when new references are available for screening.

DistillerSR also has a module called CuratorCR. Integrated seamlessly with DistillerSR, CuratorCR is a research knowledge center that centrally and dynamically manages an organization’s evidence-based research, allowing you to continuously collect, update, share, and reuse its data. As a result, CuratorCR eliminates re-analyzing and extracting the same data from already screened and processed references throughout an organization — speeding screening and data extraction times while reducing overall subscription costs for references. Moreover, teams can create segmented project or subject-based databases consisting of previously collected data for reuse in other reviews, or for joint research consortia between private and public sector organizations.

The data reuse approach is timely. A movement in Europe toward joint clinical assessments and scientific consultations for the most technical and demanding innovations places a premium on technology or structures that facilitate collaboration across HTA bodies. National HTA agencies often evaluate the same technologies within the same time frame, resulting in duplicate effort. The Council of the EU and the European Parliament have adopted the EU HTA Regulation, which “aims to harmonize methodological standards and to foster collaboration among European HTA bodies.”25

New oncology medications and advanced therapy medicinal products will be assessed jointly as of 2025, though each country makes the final HTA appraisals and reimbursement decisions. Orphan medicinal products will be jointly assessed as of 2028.

Software such as DistillerSR and its add-on module CuratorCR, which enable the integration of assessments by the EUnetHTA 21 consortium with the systems of member states, could only be beneficial.

Automating some of the most time-consuming and error-prone functions of a systematic review can increase the efficiency and accuracy of HTA development at a time when HTA agencies are under pressure to produce more and better-quality assessments. Literature review automation software that facilitates collaboration is especially desirable in this team-based industry where outsourcing is common.

- O’Rourke B, Oortwijn W, Schuller T. Announcing the new definition of health technology assessment. Value Health. 2020;23(6):824-825. DOI: 10.1016/j.jval.2020.05.001

- Kristensen FB, Husereau D, Huić M, et al. Identifying the need for good practices in health technology assessment: Summary of the ISPOR HTA council working group report on good practices in HTA. Value Health. 2019;22(1):13-20. DOI: 10.1016/j.jval.2018.08.010

- O’Rourke B, Oortwijn W, Schuller T. Announcing the new definition of health technology assessment. Value Health. 2020;23(6):824-825. DOI: 10.1016/j.jval.2020.05.001

- World Health Organization. Health Technology Assessment Survey 2020/21. Accessed October 13, 2022. https://www.who.int/data/stories/health-technology-assessment-a-visual-summary

- European Network for Health Technology Assessment (EUnetHTA). An Analysis of HTA and Reimbursement Procedures in EUnetHTA Partner Countries: Final Report. Accessed October 13, 2022. https://www.eunethta.eu/wp-content/uploads/2018/02/WP7-Activity-1-Report.pdf

- European Patients’ Academy on Therapeutic Innovation. Health Technology Assessment Process: Fundamentals. Accessed October 13, 2022. https://toolbox.eupati.eu/resources/health-technology-assessment-process-fundamentals/

- O’Rourke B, Oortwijn W, Schuller T. Announcing the new definition of health technology assessment. Value Health. 2020;23(6):824-825. DOI: 10.1016/j.jval.2020.05.001

- Schmidt L, Olorisade BK, McGuinness LA, Thomas J, Higgins JPT. Data extraction methods for systematic review (semi)automation: A living review protocol. F1000Res. 2020;9:210. DOI: 10.12688/f1000research.22781

- Zhang Y, Liang S, Feng Y, et al. Automation of literature screening using machine learning in medical evidence synthesis: a diagnostic test accuracy systematic review protocol. Systematic Reviews. 2022;11(1):11. DOI: 10.1186/s13643-021-01881-5

- Schmidt L, Olorisade BK, McGuinness LA, Thomas J, Higgins JPT. Data extraction methods for systematic review (semi)automation: A living review protocol. F1000Res. 2020;9:210. DOI: 10.12688/f1000research.22781

- European Network for Health Technology Assessment (EUnetHTA). An Analysis of HTA and Reimbursement Procedures in EUnetHTA Partner Countries: Final Report. Accessed October 13, 2022. https://www.eunethta.eu/wp-content/uploads/2018/02/WP7-Activity-1-Report.pdf

- European Network for Health Technology Assessment (EUnetHTA). An Analysis of HTA and Reimbursement Procedures in EUnetHTA Partner Countries: Final Report. Accessed October 13, 2022. https://www.eunethta.eu/wp-content/uploads/2018/02/WP7-Activity-1-Report.pdf

- National Institute for Health and Care Excellence. Health Technology Evaluation at NICE: What Happens After the Transition Period? Accessed October 13, 2022. https://www.nice.org.uk/about/who-we-are/corporate-publications/health-technology-evaluation-at-nice–what-happens-after-the-transition-period

- World Health Organization. Health Technology Assessment Survey 2020/21. Accessed October 13, 2022. https://www.who.int/data/stories/health-technology-assessment-a-visual-summary

- European Network for Health Technology Assessment (EUnetHTA). An Analysis of HTA and Reimbursement Procedures in EUnetHTA Partner Countries: Final Report. Accessed October 13, 2022. https://www.eunethta.eu/wp-content/uploads/2018/02/WP7-Activity-1-Report.pdf

- European Network for Health Technology Assessment. Process of Information Retrieval for Systematic Reviews and Health Technology Assessments on Clinical Effectiveness. Version 2.0. December 2019. Accessed October 13, 2022. https://www.eunethta.eu/wp-content/uploads/2020/01/EUnetHTA_Guideline_Information_Retrieval_v2-0.pdf

- Büchter RB, Weise A, Pieper D. Reporting of methods to prepare, pilot and perform data extraction in systematic reviews: analysis of a sample of 152 Cochrane and non-Cochrane reviews. BMC Med Res Methodol. 2021;21(1):240. DOI: 10.1186/s12874-021-01438-z

- Xu C, Yu T, Furuya-Kanamori L, et al. Validity of data extraction in evidence synthesis practice of adverse events: reproducibility study. BMJ. 2022;377:e069155. DOI: 10.1136/bmj-2021-069155

- Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. DOI: 10.1136/bmj.n71

- Scott AM, Forbes C, Clark J, Carter M, Glasziou P, Munn Z. Systematic review automation tools improve efficiency but lack of knowledge impedes their adoption: a survey. J Clin Epidemiol. 2021;138:80-94. DOI: 10.1016/j.jclinepi.2021.06.030

- Scott AM, Forbes C, Clark J, Carter M, Glasziou P, Munn Z. Systematic review automation tools improve efficiency but lack of knowledge impedes their adoption: a survey. J Clin Epidemiol. 2021;138:80-94. DOI: 10.1016/j.jclinepi.2021.06.030

- Hamel C, Kelly SE, Thavorn K, Rice DB, Wells GA, Hutton B. An evaluation of DistillerSR’s machine learning-based prioritization tool for title/abstract screening – impact on reviewer-relevant outcomes. BMC Med Res Methodol. 2020;20(1):256. DOI: 10.1186/s12874-020-01129-1

- Smela B, Myjak I, O’Blenis P, Millier A. PNS60 Use of Artificial Intelligence with DistillerSR Software in Selected Systematic Literature Reviews. Value Health. 2020;22:suppl:S92. DOI: 10.1016/j.vhri.2020.07.479

- Wang T, Lipska I, McAuslane N, et al. Benchmarking health technology assessment agencies-methodological challenges and recommendations. Int J Technol Assess Health Care. 2020:1-17. DOI: 10.1017/s0266462320000598

- Julian E, Gianfrate F, Sola-Morales O, et al. How can a joint European health technology assessment provide an ‘additional benefit’ over the current standard of national assessments? Insights generated from a multi-stakeholder survey in hematology/oncology. Health Econ Rev. 2022;12(1):30. DOI: 10.1186/s13561-022-00379-7

- European Network for Health Technology Assessment (EUnetHTA). An Analysis of HTA and Reimbursement Procedures in EUnetHTA Partner Countries: Final Report. Accessed October 13, 2022. https://www.eunethta.eu/wp-content/uploads/2018/02/WP7-Activity-1-Report.pdf


Frequently Asked Questions

What is a Health Technology Assessment (HTA) and why is it important?

A Health Technology Assessment (HTA) is a process used to evaluate the value of healthcare interventions (like pharmaceuticals, medical devices, etc.). Its purpose is generally to inform decisions made by governments, healthcare providers and insurers about market entry, pricing, or reimbursement for these interventions.

HTA is vital because it is intended to promote “an equitable, efficient, and high-quality health system.” Major public, individual health, and economic consequences flow from HTA findings.

How does the Systematic Literature Review (SLR) fit into the HTA process?

The Systematic Literature Review (SLR) is one of the primary information streams feeding into an HTA. It provides a complete synthesis of all relevant studies in a transparent, verifiable manner to reduce the risk of bias. The steps typically involved in an SLR for HTA include:

- Topic identification

- Search design, literature searching, screening, and retrieval

- Data extraction

- Assessment of evidence quality and bias risk

- Data synthesis/meta-analysis

- Formulation and dissemination of findings and recommendations

What are the key challenges HTA bodies face in conducting Systematic Literature Reviews?

The primary challenges to developing an HTA are:

- Information Overload: Literature searches often yield thousands of records, with on average only 2.9% being relevant. The volume of studies has risen sharply, making screening difficult.

- Time Constraints: Agencies often face short deadlines (e.g., two to three months in European agencies), and systematic reviews quickly become outdated, creating a need for rapid assessments and updates.

- Need for Transparency and Accuracy: Inconsistency, error, and inadvertent bias can be introduced when human reviewers apply criteria differently, especially during data extraction, which has been found to have an 85% error rate in some analyses.

How can automating the SLR process streamline HTA activities?

Automating aspects of the SLR process, particularly literature screening, retrieval, data extraction, and risk of bias assessment, can significantly improve HTAs by increasing efficiency and accuracy. Automation software like DistillerSR is designed to:

- Speed: Reduce the title/abstract screening burden (e.g., by a median of 40.6% in one study). Employ AI-assisted data extraction with human-in-the-loop oversight.

- Accuracy: Maintain a low margin of error, similar to or better than human reviewers, while avoiding manual entry errors.

- Transparency: Record inclusion/exclusion decisions and track all review activity to provide data for audits and reproducibility.

What should I consider when evaluating literature review software?

- Collaboration Features: Web-based platform that allows multiple internal and external (outsourced) team members to work concurrently on the same review with tracked activity.

- Audit Trails (Transparency): The software must automatically log every significant action.

- Regulatory Compliance Support: The vendor should explicitly address compliance with relevant regulations and guidelines.

- Data Security: The hosting environment (cloud or on-premises) must meet stringent security standards (e.g., ISO 27001, SOC 2 certifications).

- Customization and Flexibility: The ability to create highly customized data extraction forms to capture specific data needed for HTA models (e.g., cost-effectiveness, specific adverse events, quality of life data).

- Advanced Features (AI/Machine Learning): Validated AI capabilities that enhance efficiency while maintaining human-in-the-loop oversight.
